About Course

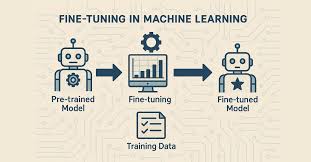

This session offers a hands-on, task-based learning experience designed to help you apply concepts in real time. Each day focuses on a specific, practical outcome, such as fine-tuning a language model, scaling an ad campaign, or building with AI tools, so that you gain tangible results as well as theoretical knowledge. With expert-led instruction, the session is structured to help you build, deploy, and iterate using industry-standard tools and workflows.

Course Content

Day 1

- Environment Setup + HuggingFace CLI Task